On September 9, 2024, the National Technical Committee 260 on Cybersecurity (SAC/TC260) officially released the Artificial Intelligence Safety Governance Framework (hereinafter referred to as the Framework). This Framework serves as a comprehensive guide for establishing an all-encompassing, full-lifecycle governance system for AI development, ensuring its healthy growth and regulated application. The Framework aligns with China’s approach toward AI, namely the “people-centered approach” and its vision of “AI for good”. The Framework supports the implementation of the Global AI Governance Initiative proposed by China.

Key Components of the AI Safety Governance Framework

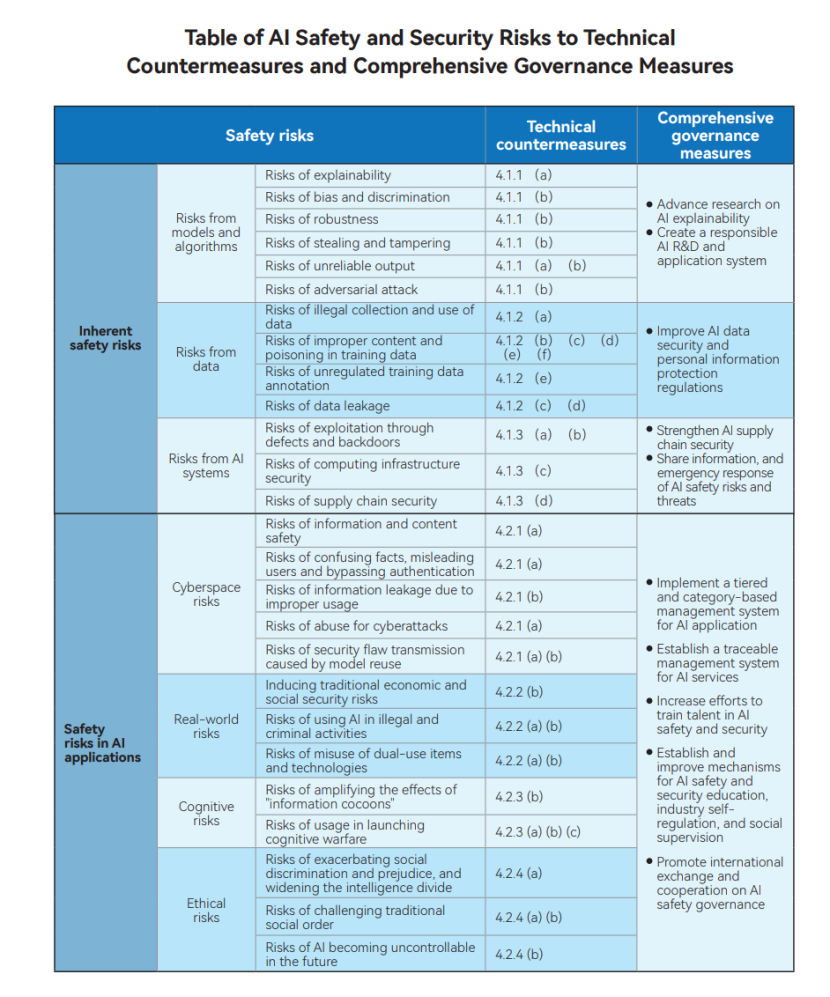

The Framework is structured into four sections: governance principles, safety risks, technical measures, and safety guidelines.

- Governance Principles: The Framework outlines four key principles for AI safety governance, namely: be inclusive and prudent; identify risks with agile governance; integrate technology and management for a coordinated response; and promote openness and cooperation for joint governance and shared benefits. These principles highlight collaboration, open governance, and shared responsibility, offering a holistic approach to AI safety.

- Safety Risks:

  - Inherent Safety Risks: These stem from AI’s inherent technological flaws, such as biases, poisoned training data, and data leakage.

  - Safety Risks in AI Applications: These result from improper or malicious use of AI, including its role in illegal activities, the amplification of “information cocoons”, and the creation of fake news.

- Technical Measures:

  - For inherent risks related to models, data, and systems, the Framework suggests technical measures such as secure development, content identification, and responsible R&D systems. It also emphasizes comprehensive governance solutions, including supply chain security.

  - For application risks in areas like network security, ethics, and cognition, it proposes measures such as security safeguards, end-use tracking, and tiered management systems. Additionally, talent cultivation and classification of AI-related activities are essential to mitigating these risks.

- Safety Guidelines: The Framework provides specific safety guidelines for various stakeholders, including model developers, service providers, and users in key sectors. These guidelines ensure that all parties involved in AI development and application adhere to standardized safety practices.

The Role of Standardization

The development and implementation of AI safety standards are crucial to the Framework’s success. This includes the creation of both a standard system and essential standards. According to an update presented by the SAC/TC260 secretariat during the Cybersecurity Standard Week in June 2024, SAC/TC260 has initiated the drafting of a standard system for AI safety. Although three rounds of public comments have been completed, the standard system remains at a preliminary stage. SAC/TC260 therefore encourages the industry to contribute actively and provide input to identify the most urgent standards.

In terms of essential standards, four standards are currently at the stage of public solicitation:

- Basic security requirements for generative artificial intelligence services

- Generative artificial intelligence data annotation security specifications

- Security specifications for generative artificial intelligence pre-training and fine-tuning data

- Labeling methods for content generated by artificial intelligence

Additionally, research on AI safety standards in specific sectors such as finance, energy, telecommunications, transportation, and public services is on the agenda of Chinese standard development organizations. The aim is to ensure that AI applications across industries are secure and that risks are effectively mitigated.

In general, the Framework is designed as a neutral and highly adaptable tool that can be tailored to the needs of different nations and organizations. By offering a broad, actionable “toolbox”, it positions itself as a practical guide for AI safety governance, making it well-suited for international adoption and collaboration. It is expected that, in the coming months, newly developed Chinese standards will largely follow the basic elements presented in the Framework. At the same time, as China continues to develop AI-specific legislation, it is likely that this Framework will be referred to as a key resource.

For the English version of the Framework, please click here to download: TC260 AI safety governance framework 1.0 (2024).