On April 28, 2024, the Secretariat of SAC/TC 260 Cybersecurity hosted an information session for industry actors on three national standards on generative artificial intelligence (AI) security, aimed at encouraging their participation in pilot trials. Below are introductions to each of the standards:

GB/T XXXXX-XXXX Basic security requirements for generative artificial intelligence services

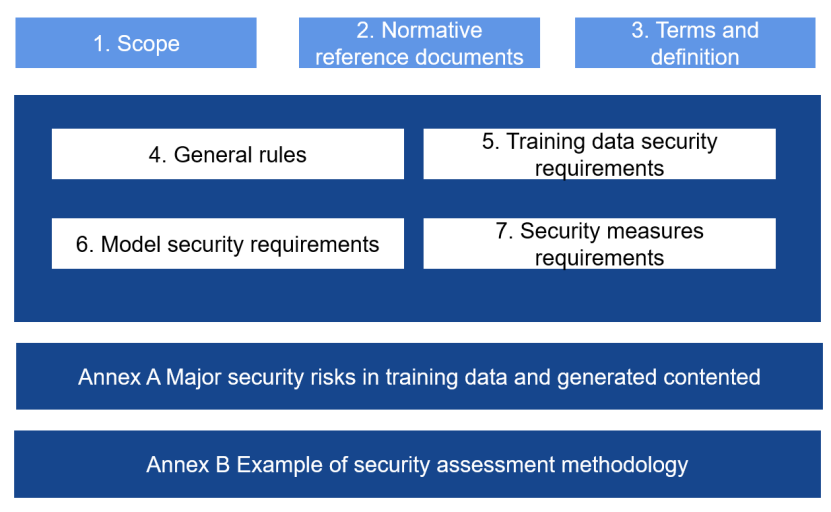

This standard (hereinafter the “Basic Requirements”) upgrades the preceding technical document, which was released on March 4, 2024. The Basic Requirements set out the security requirements applicable to generative AI service providers, covering training data security, model security, and overall security measures. Notably, they complement the Interim Measures for the Management of Generative Artificial Intelligence Services (hereinafter the “Interim Measures”) by providing a framework for the security assessment outlined in Article 17 of the Interim Measures. Furthermore, when undergoing algorithm filing procedures, generative AI service providers must conduct a security assessment in line with the Basic Requirements and submit the assessment report to the appropriate regulatory authority.

Figure 1. Chapter and Framework of the National Standard of Basic Security for Generative Artificial Intelligence Service

During the meeting, the Secretariat updated participants on the drafting process and highlighted the most significant changes in the latest draft. While the draft for public comment (published on May 23, 2024) does not present major changes, pilot trials are scheduled to proceed, relying on enterprise self-assessment and guidance from the technical support group. The technical support group will coordinate training sessions, facilitate Q&A sessions, and provide additional support as needed.

GB/T XXXXX-XXXX Cybersecurity technology – Generative artificial intelligence data annotation security specification

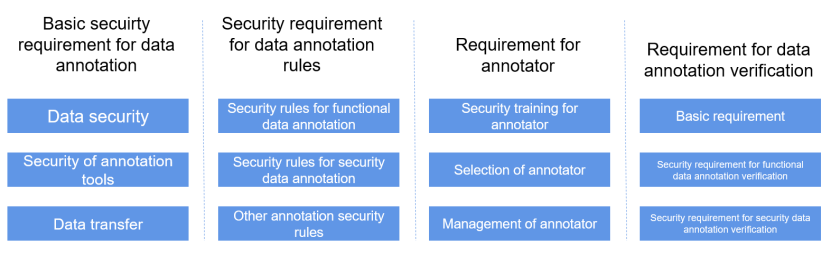

With the rise of generative AI, China has prioritized the security and reliability of AI-generated content, recognizing the pivotal role of data annotation in shaping output quality. In line with the annotation provisions of the Interim Measures (Articles 8 and 19), the standard sets out actionable measures for annotation service providers. Specifically, it defines security requirements for generative AI data annotation, as depicted in Figure 2. The standard is open for public comment until June 2, 2024, and draws upon GB/T 42755-2023 Artificial Intelligence – Code of Practice for Data Labeling of Machine Learning in establishing its foundational processes.

Moreover, the lead drafter outlined plans for future revisions, though none are imminent. These include:

- Expanding the standard’s applicability, from annotators to dataset creators.

- Clarifying the standard’s relationship with the other two national generative AI standards.

- Introducing requirements regarding the sourcing of data.

- Enhancing verification methodologies and adjusting them to align with the expanded scope of application.

Figure 2. Security Framework for Generative AI Data Annotation

GB/T XXXXX-XXXX Cybersecurity technology – Security specification for generative artificial intelligence pre-training and fine-tuning data

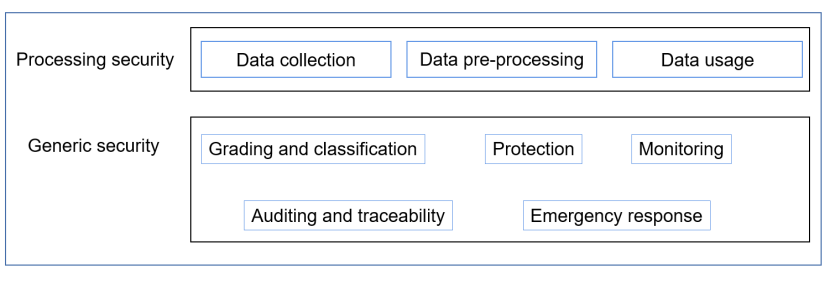

Like the other two standards, this standard aligns with the Interim Measures, specifically the training data requirements in Article 7. By addressing “data general security” and “data processing security”, it sets out the security protection requirements that developers of generative AI services should meet when processing data for pre-training and fine-tuning. These requirements are intended to mitigate security threats such as data poisoning and malicious prompts. Open for public comment until June 2, 2024, the standard aims to safeguard the security of generative AI services, mitigate risks to intellectual property rights and personal information, and foster healthy competition in the generative AI sector.

Figure 3. Framework for Pre-training and Fine-tuning Data Security for Generative AI

In summary, the three national standards reflect SAC/TC 260’s commitment to supporting the implementation of the Interim Measures. While the Basic Requirements cover the entire model lifecycle, the other two standards focus solely on model design and development. Those two differ in emphasis: one addresses the processing of pre-training and fine-tuning data, and the other addresses the annotation process. Pilot trials for all three standards are underway and are expected to surface operational challenges that will inform future upgrades and revisions.